Reference no: EM131236304

Text Book - Algorithm Design by Jon Kleinberg and Eva Tardos

Chapter 13 - Randomized Algorithms

Exercises

Q1. 3-Coloring is a yes/no question, but we can phrase it as an optimization problem as follows.

Suppose we are given a graph G = (V, E), and we want to color each node with one of three colors, even if we aren't necessarily able to give different colors to every pair of adjacent nodes. Rather, we say that an edge (u, v) is satisfied if the colors assigned to u and v are different.

Consider a 3-coloring that maximizes the number of satisfied edges, and let c∗ denote this number. Give a polynomial-time algorithm that produces a 3-coloring that satisfies at least 2/3c∗ edges. If you want, your algorithm can be randomized; in this case, the expected number of edges it satisfies should be at least 2/3c∗.

Q2. Consider a county in which 100,000 people vote in an election. There are only two candidates on the ballot: a Democratic candidate (denoted D) and a Republican candidate (denoted R). As it happens, this county is heavily Democratic, so 80,000 people go to the polls with the intention of voting for D, and 20,000 go to the polls with the intention of voting for R.

However, the layout of the ballot is a little confusing, so each voter, independently and with probability 1100, votes for the wrong candidate-that is, the one that he or she didn't intend to vote for. (Remember that in this election, there are only two candidates on the ballot.)

Let X denote the random variable equal to the number of votes received by the Democratic candidate D, when the voting is conducted with this process of error. Determine the expected value of X, and give an explanation of your derivation of this value.

3. In Section 13.1, we saw a simple distributed protocol to solve a particular contention-resolution problem. Here is another setting in which randomization can help with contention resolution, through the distributed construction of an independent set.

Suppose we have a system with n processes. Certain pairs of processes are in conflict, meaning that they both require access to a shared resource. In a given time interval, the goal is to schedule a large subset S of the processes to run-the rest will remain idle-so that no two conflicting processes are both in the scheduled set S. We'll call such a set S conflict-free.

One can picture this process in terms of a graph G = (V, E) with a node representing each process and an edge joining pairs of processes that are in conflict. It is easy to check that a set of processes S is conflict-free if and only if it forms an independent set in G. This suggests that finding a maximum-size conflict-free set S, for an arbitrary conflict G, will be difficult (since the general Independent Set Problem is reducible to this problem). Nevertheless, we can still look for heuristics that find a reasonably large conflict-free set. Moreover, we'd like a simple method for achieving this without centralized control: Each process should communicate with only a small number of other processes and then decide whether or not it should belong to the set S.

We will suppose for purposes of this question that each node has exactly d neighbors in the graph G. (That is, each process is in conflict with exactly d other processes.)

(a) Consider the following simple protocol.

Each process Pi independently picks a random value xi; it sets xi to 1with probability ½ and sets xi to 0 with probability ½. It then decides to enter the set S if and only if it chooses the value 1, and each of the processes with which it is in conflict chooses the value 0.

Prove that the set S resulting from the execution of this protocol is conflict-free. Also, give a formula for the expected size of S in terms of n (the number of processes) and d (the number of conflicts per process).

(b) The choice of the probability ½ in the protocol above was fairly arbitrary, and it's not clear that it should give the best system performance. A more general specification of the protocol would replace the probability ½ by a parameter p between 0 and 1, as follows.

Each process Pi independently picks a random value xi; it sets xi to 1 with probability p and sets xi to 0 with probability 1- p. It then decides to enter the set S if and only if it chooses the value 1, and each of the processes with which it is in conflict chooses the value 0.

In terms of the parameters of the graph G, give a value of p so that the expected size of the resulting set S is as large as possible. Give a formula for the expected size of S when p is set to this optimal value.

Q4. A number of peer-to-peer systems on the Internet are based on overlay networks. Rather than using the physical Internet topology as the net-work on which to perform computation, these systems run protocols by which nodes choose collections of virtual "neighbors" so as to define a higher-level graph whose structure may bear little or no relation to the underlying physical network. Such an overlay network is then used for sharing data and services, and it can be extremely flexible compared with a physical network, which is hard to modify in real time to adapt to changing conditions.

Peer-to-peer networks tend to grow through the arrival of new participants, who join by linking into the existing structure. This growth process has an intrinsic effect on the characteristics of the overall network. Recently, people have investigated simple abstract models for network growth that might provide insight into the way such processes behave, at a qualitative level, in real networks.

Here's a simple example of such a model. The system begins with a single node v1. Nodes then join one at a time; as each node joins, it executes a protocol whereby it forms a directed link to a single other node chosen uniformly at random from those already in the system. More concretely, if the system already contains nodes v1, v2,..., vk-1 and node vk wishes to join, it randomly selects one of v1, v2,..., vk-1 and links to this node.

Suppose we run this process until we have a system consisting of nodes v1, v2,..., vn; the random process described above will produce a directed network in which each node other than v1 has exactly one outgoing edge. On the other hand, a node may have multiple incoming links, or none at all. The incoming links to a node vj reflect all the other nodes whose access into the system is via vj; soif vj has many incoming links, this can place a large load on it. To keep the system load-balanced, then, we'd like all nodes to have a roughly comparable number of incoming links. That's unlikely to happen here, however, since nodes that join earlier in the process are likely to have more incoming links than nodes that join later. Let's try to quantify this imbalance as follows.

(a) Given the random process described above, what is the expected number of incoming links to node vj in the resulting network? Give an exact formula in terms of n and j, and also try to express this quantity asymptotically (via an expression without large summations) using Θ(·) notation.

(b) Part (a) makes precise a sense in which the nodes that arrive early carry an "unfair" share of the connections in the network. Another way to quantify the imbalance is to observe that, in a run of this random process, we expect many nodes to end up with no incoming links.

Give a formula for the expected number of nodes with no incoming links in a network grown randomly according to this model.

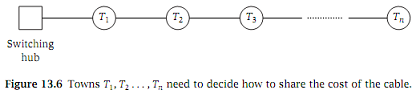

Q5. Out in a rural part of the county somewhere, n small towns have decided to get connected to a large Internet switching hub via a high-volume fiber-optic cable. The towns are labeled T1, T2,..., Tn, and they are all arranged on a single long highway, so that town Ti is i miles from the switching hub (See Figure 13.6).

Now this cable is quite expensive; it costs k dollars permile, resulting in an overall cost of kn dollars for the whole cable. The towns get together and discuss how to divide up the cost of the cable.

First, one of the town's way out at the far end of the highway makes the following proposal.

Proposal A. Divide the cost evenly among all towns, so each pays k dollars.

There's some sense in which Proposal A is fair, since it's as if each town is paying for the mile of cable directly leading up to it.

But one of the towns very close to the switching hub objects, pointing out that the faraway towns are actually benefiting from a large section of the cable, whereas the close-in towns only benefit from a short section of it. So they make the following counterproposal.

Proposal B. Divide the cost so that the contribution of town Ti is proportional to i, its distance from the switching hub.

One of the other towns very close to the switching hub points out that there's another way to do a non proportional division that is also natural. This is based on conceptually dividing the cable into n equal-

length "edges" e1,..., en, where the first edge e1 runs from the switching hub to T1, and the ith edge ei (i > 1) runs from Ti-1 to Ti. Now we observe that, while all the towns benefit from e1, only the last town benefits from en. So they suggest.

Proposal C. Divide the cost separately for each edge ei. The cost of ei should be shared equally by the towns Ti, Ti+1,..., Tn, since these are the towns "downstream" of ei.

So now the towns have many different options; which is the fairest? To resolve this, they turn to the work of Lloyd Shapley, one of the most famous mathematical economists of the 20th century. He proposed what is now called the Shapley value as a general mechanism for sharing costs or benefits among several parties. It can be viewed as determining the "marginal contribution" of each party, assuming the parties arrive in a random order.

Here's how it would work concretely in our setting. Consider an ordering O of the towns, and suppose that the towns "arrive" in this order. The marginal cost of town Ti in order O is determined as follows. If Ti is first in the order O, then Ti pays ki, the cost of running the cable all the way from the switching hub to Ti. Otherwise, look at the set of towns that come before Ti in the order O, and let Tj be the farthest among these towns from the switching hub. When Ti arrives, we assume the cable already reaches out to Tj but no farther. So if j > i (Tj is farther out than Ti), then the marginal cost of Ti is 0, since the cable already runs past Ti on its way out to Tj. On the other hand, if j < i, then the marginal cost of Ti is k(i - j): the cost of extending the cable from Tj out to Ti.

Now, let Xi be the random variable equal to the marginal cost of town Ti when the order O is selected uniformly at random from all permutations of the towns. Under the rules of the Shapley value, the amount that Ti should contribute to the overall cost of the cable is the expected value of Xi.

The question is: Which of the three proposals above, if any, gives the same division of costs as the Shapley value cost-sharing mechanism? Give a proof for your answer.

Q6. One of the (many) hard problems that arises in genome mapping can be formulated in the following abstract way. We are given a set of n markers {μ1,..., μn}-these are positions on a chromosome that we are trying to map-and our goal is to output a linear ordering of these markers. The output should be consistent with a set of k constraints, each specified by a triple (μi, μj, μk), requiring that μj lie between μi and μk in the total ordering that we produce. (Note that this constraint does not specify which of μi or μk should come first in the ordering, only that μj should come between them.)

Now it is not always possible to satisfy all constraints simultaneously, so we wish to produce an ordering that satisfies as many as possible. Unfortunately, deciding whether there is an ordering that satisfies at least k' of the k constraints is an NP-complete problem (you don't have to prove this.)

Give a constant α> 0 (independent of n) and an algorithm with the following property. If it is possible to satisfy k∗ of the constraints, then the algorithm produces an ordering of markers satisfying at least αk∗ of the constraints. Your algorithm may be randomized; in this case it should produce an ordering for which the expected number of satisfied constraints is at least αk∗.

Q7. In Section 13.4, we designed an approximation algorithm to within a factor of 7/8 for the MAX 3-SAT Problem, where we assumed that each clause has terms associated with three different variables. In this problem, we will consider the analogous MAX SAT Problem: Given a set of clauses C1,..., Ck over a set of variables X = {x1,..., xn}, find a truth assignment satisfying as many of the clauses as possible. Each clause has at least one term in it, and all the variables in a single clause are distinct, but otherwise we do not make any assumptions on the length of the clauses: There may be clauses that have a lot of variables, and others may have just a single variable.

(a) First consider the randomized approximation algorithm we used for MAX 3-SAT, setting each variable independently to true or false with probability 1/2 each. Show that the expected number of clauses satisfied by this random assignment is at least k/2, that is, at least half of the clauses are satisfied in expectation. Give an example to show that there are MAX SAT instances such that no assignment satisfies more than half of the clauses.

(b) If we have a clause that consists only of a single term (e.g., a clause consisting just of x1, or just of x2), then there is only a single way to satisfy it: We need to set the corresponding variable in the appropriate way. If we have two clauses such that one consists of just the term xi, and the other consists of just the negated term xi, then this is a pretty direct contradiction.

Assume that our instance has no such pair of "conflicting clauses"; that is, for no variable xi do we have both a clause C = {xi} and a clause C' = {xi-}. Modify the randomized procedure above to improve the approximation factor from1/2 to at least .6. That is, change the algorithm so that the expected number of clauses satisfied by the process is at least .6k.

(c) Give a randomized polynomial-time algorithm for the general MAX SAT Problem, so that the expected number of clauses satisfied by the algorithm is at least a .6 fraction of the maximum possible. (Note that, by the example in part (a), there are instances where one cannot satisfy more than k/2 clauses; the point here is that we'd still like an efficient algorithm that, in expectation, can satisfy a .6 fraction of the maximum that can be satisfied by an optimal assignment.)

Q8. Let G = (V, E) be an undirected graph with n nodes and m edges. For a subset X ⊆ V, we use G[X] to denote the sub-graph induced on X-that is, the graph whose node set is X and whose edge set consists of all edges of G for which both ends lie in X.

We are given a natural number k ≤ n and are interested in finding a set of k nodes that induces a "dense" sub-graph of G; we'll phrase this concretely as follows. Give a polynomial-time algorithm that produces, for a given natural number k ≤ n, a set X ⊆ V of k nodes with the property that the induced subgraph G[X] has at least mk(k-1)/n(n-1) edges.

You may give either (a) a deterministic algorithm, or (b) a randomized algorithm that has an expected running time that is polynomial, and that only outputs correct answers.

Q9. Suppose you're designing strategies for selling items on a popular auction Web site. Unlike other auction sites, this one uses a one-pass auction, in which each bid must be immediately (and irrevocably) accepted or refused. Specifically, the site works as follows.

- First a seller puts up an item for sale.

- Then buyers appear in sequence.

- When buyer I appears, he or she makes a bid bi > 0.

- The seller must decide immediately whether to accept the bid or not. If the seller accepts the bid, the item is sold and all future buyers are turned away. If the seller rejects the bid, buyer i departs and the bid is withdrawn; and only then does the seller see any future buyers.

Suppose an item is offered for sale, and there are n buyers, each with a distinct bid. Suppose further that the buyers appear in a random order, and that the seller knows the number n of buyers. We'd like to design a strategy whereby the seller has a reasonable chance of accepting the highest of the n bids. By a strategy, we mean a rule by which the seller decides whether to accept each presented bid, based only on the value of n and the sequence of bids seen so far. For example, the seller could always accept the first bid presented. This results in the seller accepting the highest of the n bids with probability only 1/n, since it requires the highest bid to be the first one presented.

Give a strategy under which the seller accepts the highest of the n bids with probability at least 1/4, regardless of the value of n. (For simplicity, you may assume that n is an even number.) Prove that your strategy achieves this probabilistic guarantee.

Q10. Consider a very simple online auction system that works as follows. There are n bidding agents; agent i has a bid bi, which is a positive natural number. We will assume that all bids bi are distinct from one another. The bidding agents appear in an order chosen uniformly at random, each proposes its bid bi in turn, and at all times the system maintains a variable b∗ equal to the highest bid seen so far. (Initially b∗ is set to 0.)

What is the expected number of times that b∗ is updated when this process is executed, as a function of the parameters in the problem?

Example. Suppose b1 = 20, b2 = 25, and b3 = 10, and the bidders arrive in the order 1, 3, 2. Then b∗ is updated for 1 and 2, but not for 3.

Q11. Load balancing algorithms for parallel or distributed systems seek to spread out collections of computing jobs over multiple machines. In this way, no one machine becomes a "hot spot." If some kind of central coordination is possible, then the load can potentially be spread out almost perfectly. But what if the jobs are coming from diverse sources that can't coordinate? As we saw in Section 13.10, one option is to assign them to machines at random and hope that this randomization will work to prevent imbalances. Clearly, this won't generally work as well as a perfectly centralized solution, but it can be quite effective. Here we try analyzing some variations and extensions on the simple load balancing heuristic we considered in Section 13.10.

Suppose you have k machines, and k jobs show up for processing. Each job is assigned to one of the k machines independently at random (with each machine equally likely).

(a) Let N(k) be the expected number of machines that do not receive any jobs, so that N(k)/k is the expected fraction of machines with nothing to do. What is the value of the limit limk→∞ N(k)/k? Give a proof of your answer.

(b) Suppose that machines are not able to queue up excess jobs, so if the random assignment of jobs to machines sends more than one job to a machine M, then M will do the first of the jobs it receives and reject the rest. Let R(k) be the expected number of rejected jobs; so R(k)/k is the expected fraction of rejected jobs. What is limk→∞ R(k)/k? Give a proof of your answer.

(c) Now assume that machines have slightly larger buffers; each machine M will do the first two jobs it receives, and reject any additional jobs. Let R2(k) denote the expected number of rejected jobs under this rule. What is limk→∞ R2(k)/k? Give a proof of your answer.

Q12. Consider the following analogue of Karger's algorithm for finding minimum s-t cuts. We will contract edges iteratively using the following randomized procedure. In a given iteration, let s and t denote the possibly contracted nodes that contain the original nodes s and t, respectively. To make sure that s and t do not get contracted, at each iteration we delete any edges connecting s and t and select a random edge to contract among the remaining edges. Give an example to show that the probability that this method finds a minimum s-t cut can be exponentially small.

Q13. Consider a balls-and-bins experiment with 2n balls but only two bins. As usual, each ball independently selects one of the two bins, both bins equally likely. The expected number of balls in each bin is n. In this problem, we explore the question of how big their difference is likely to be. Let X1 and X2 denote the number of balls in the two bins, respectively. (X1 and X2 are random variables.) Prove that for any ε > 0 there is a constant c > 0 such that the probability Pr[X1 - X2 > c√n] ≤ ε.

Q14. Some people designing parallel physical simulations come to you with the following problem. They have a set P of k basic processes and want to assign each process to run on one of two machines, M1 and M2. They are then going to run a sequence of n jobs, J1,..., Jn. Each job Ji is represented by a set Pi ⊆ P of exactly 2n basic processes which must be running (each on its assigned machine) while the job is processed. An assignment of basic processes to machines will be called perfectly balanced if, for each job Ji, exactly n of the basic processes associated with Ji have been assigned to each of the two machines. An assignment of basic processes to machines will be called nearly balanced if, for each job Ji, no more than (4/3)n of the basic processes associated with Ji have been assigned to the same machine.

(a) Show that for arbitrarily large values of n, there exist sequences of jobs J1,..., Jn for which no perfectly balanced assignment exists.

(b) Suppose that n ≥ 200. Give an algorithm that takes an arbitrary sequence of jobs J1,..., Jn and produces a nearly balanced assignment of basic processes to machines. Your algorithm may be randomized, in which case its expected running time should be polynomial, and it should always produce the correct answer.

Q15. Suppose you are presented with a very large set S of real numbers, and you'd like to approximate the median of these numbers by sampling. You may assume all the numbers in S are distinct. Let n =|S|; we will say that a number x is an ε-approximate median of S if at least (1/2 - ε)n numbers in S are less than x, and at least (1/2 - ε)n numbers in S are greater than x.

Consider an algorithm that works as follows. You select a subset S' ⊆ S uniformly at random, compute the median of S', and return this as an approximate median of S. Show that there is an absolute constant c, independent of n, so that if you apply this algorithm with a sample S' of size c, then with probability at least .99, the number returned will be a (.05)-approximate median of S. (You may consider either the version of the algorithm that constructs S' by sampling with replacement, so that an element of S can be selected multiple times, or one without replacement.)

Q16. Consider the following (partially specified) method for transmitting a message securely between a sender and a receiver. The message will be represented as a string of bits. Let ∑ = {0, 1}, and let ∑∗ denote the set of all strings of 0 or more bits (e.g., 0, 00, 1110001∈ ∑∗). The "empty string," with no bits, will be denoted λ ∈ ∑∗.

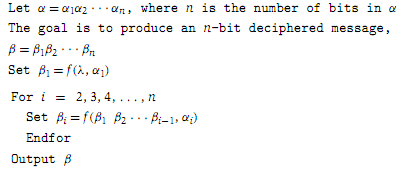

The sender and receiver share a secret function f: ∑∗ × ∑ → ∑. That is, f takes a word and a bit, and returns a bit. When the receiver gets a sequence of bits α ∈ ∗, he or she runs the following method to decipher it.

One could view this is as a type of "stream cipher with feedback." One problem with this approach is that, if any bit αi gets corrupted in transmission, it will corrupt the computed value of βj for all j ≥ i.

We consider the following problem. A sender S wants to transmit the same (plain-text) message β to each of k receivers R1,..., Rk. With each one, he shares a different secret function f(i). Thus he sends a different encrypted message α(i) to each receiver, so that α(i) decrypts to β when the above algorithm is run with the function f(i).

Unfortunately, the communication channels are very noisy, so each of the n bits in each of the k transmissions is independently corrupted (i.e., flipped to its complement) with probability 1/4. Thus no single receiver on his or her own is likely to be able to decrypt the message correctly. Show, however, that if k is large enough as a function of n, then the k receivers can jointly reconstruct the plain-text message in the following way. They get together, and without revealing any of the α(i) or the f(i), they interactively run an algorithm that will produce the correct β with probability at least 9/10. (How large do you need k to be in your algorithm?)

17. Consider the following simple model of gambling in the presence of bad odds. At the beginning, your net profit is 0. You play for a sequence of n rounds; and in each round, your net profit increases by 1with probability 1/3, and decreases by 1 with probability 2/3.

Show that the expected number of steps in which your net profit is positive can be upper-bounded by an absolute constant, independent of the value of n.

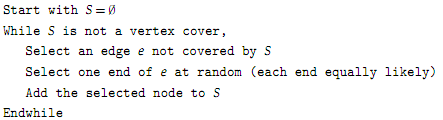

18. In this problem, we will consider the following simple randomized algorithm for the Vertex Cover Algorithm.

We will be interested in the expected cost of a vertex cover selected by this algorithm.

(a) Is this algorithm a c-approximation algorithm for the Minimum Weight Vertex Cover Problem for some constant c? Prove your answer.

(b) Is this algorithm a c-approximation algorithm for the Minimum Cardinality Vertex Cover Problem for some constant c? Prove your answer.

(Hint: For an edge, let pe denote the probability that edge e is selected as an uncovered edge in this algorithm. Can you express the expected value of the solution in terms of these probabilities? To bound the value of an optimal solution in terms of the pe probabilities, try to bound the sum of the probabilities for the edges incident to a given vertex v, namely, ∑e incident to v pe.)