Reference no: EM132371371

Probability Problems - Do the following problems.

1. Consider the minimax criterion for a two-category classification problem.

(a) Fill in the steps of the derivation of Eq. 23:

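$$R(P(\omega_1)) = \lambda_{22} + (\lambda_{12} - \lambda_{22})\int_{\mathcal{R}_1} p(x|\omega_2)\,dx + P(\omega_1)\left[(\lambda_{11} - \lambda_{22}) + (\lambda_{21} - \lambda_{11})\int_{\mathcal{R}_2} p(x|\omega_1)\,dx - (\lambda_{12} - \lambda_{22})\int_{\mathcal{R}_1} p(x|\omega_2)\,dx\right].$$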

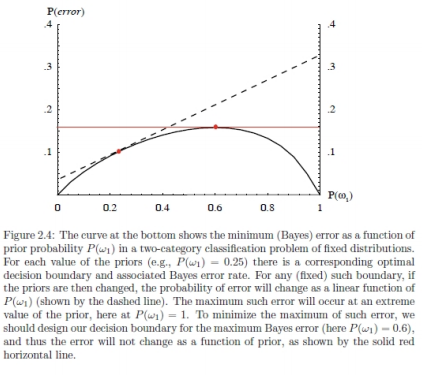

(b) Explain why the overall Bayes risk must be concave down as a function of the prior P(ω1), as shown in Fig. 2.4.

(c) Assume we have one-dimensional Gaussian distributions p(x|ωi) ∼ N(µi, σi²), i = 1, 2, but completely unknown prior probabilities. Use the minimax criterion to find the optimal decision point x* in terms of µi and σi under a zero-one risk.

(d) For the decision point x* you found in (c), what is the overall minimax risk? Express this risk in terms of an error function erf(·).

(e) Assume p(x|ω1) ∼ N(0, 1) and p(x|ω2) ∼ N(1/2, 1/4), under a zero-one loss. Find x* and the overall minimax loss.

(f) Assume p(x|ω1) ∼ N(5, 1) and p(x|ω2) ∼ N(6, 1). Without performing any explicit calculations, determine x* for the minimax criterion. Explain your reasoning.
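Under a zero-one loss, the bracketed term in the risk expression of part (a) vanishes when the two conditional error integrals are equal. The following is a minimal numerical sketch of that condition, not a substitute for the analytic derivation. It assumes a single threshold with µ1 < µ2, so that R1 = (-∞, x*) and R2 = (x*, ∞); the function names and the example parameters are hypothetical and are not taken from the problem set.

```python
import math


def normal_cdf(x, mu, sigma):
    """CDF of N(mu, sigma^2), written with the error function."""
    return 0.5 * (1.0 + math.erf((x - mu) / (sigma * math.sqrt(2.0))))


def error_gap(x, mu1, sigma1, mu2, sigma2):
    """Difference between the two conditional errors for a threshold at x.

    Assumes mu1 < mu2, with R1 = (-inf, x) and R2 = (x, inf).
    """
    err1 = 1.0 - normal_cdf(x, mu1, sigma1)  # omega_1 sample lands in R2
    err2 = normal_cdf(x, mu2, sigma2)        # omega_2 sample lands in R1
    return err1 - err2


def minimax_threshold(mu1, sigma1, mu2, sigma2, tol=1e-12):
    """Bisection on [mu1, mu2] for the point where both errors are equal."""
    lo, hi = mu1, mu2
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if error_gap(mid, mu1, sigma1, mu2, sigma2) > 0.0:
            lo = mid  # omega_1 error is still larger: move the threshold right
        else:
            hi = mid
    return 0.5 * (lo + hi)


# Hypothetical example values, chosen only to illustrate the call.
x_star = minimax_threshold(0.0, 1.0, 3.0, 2.0)
risk = normal_cdf(x_star, 3.0, 2.0)  # either conditional error at x_star
print(x_star, risk)
```

Bisection works here because the gap is positive at µ1, negative at µ2, and decreases monotonically in between. For unequal variances the true Bayes regions need not be single intervals, so the one-threshold assumption should be checked against the problem at hand.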

2. Let the conditional densities for a two-category one-dimensional problem be given by the Cauchy distribution

$$p(x|\omega_i) = \frac{1}{\pi b}\cdot\frac{1}{1 + \left(\frac{x - a_i}{b}\right)^2}, \quad i = 1, 2.$$

(a) By explicit integration, check that the distributions are indeed normalized.

(b) Assuming P(ω1)= P(ω2), show that P(ω1|x) = P(ω2|x) if x =(a1 + a2)/2, i.e., the minimum error decision boundary is a point midway between the peaks of the two distributions, regardless of b.

(c) Plot P(ω1|x) for the case a1 = 3, a2 = 5 and b = 1.

(d) How do P(ω1|x) and P(ω2|x) behave as x → -∞? x → ∞? Explain.
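As a starting point for part (c), the posterior can be tabulated directly from Bayes' rule. The sketch below uses the values a1 = 3, a2 = 5, b = 1 from the problem and assumes equal priors as in part (b). The function names and the grid of x values are illustrative choices, and the resulting pairs can be passed to any plotting tool.

```python
import math


def cauchy_pdf(x, a, b):
    """Cauchy density with location a and scale b."""
    return 1.0 / (math.pi * b * (1.0 + ((x - a) / b) ** 2))


def posterior_w1(x, a1, a2, b, prior1=0.5):
    """P(omega_1 | x) by Bayes' rule for two Cauchy class-conditionals."""
    num = cauchy_pdf(x, a1, b) * prior1
    den = num + cauchy_pdf(x, a2, b) * (1.0 - prior1)
    return num / den


# Grid from -5 to 15 in steps of 0.5 (an arbitrary plotting range).
xs = [0.5 * i for i in range(-10, 31)]
curve = [(x, posterior_w1(x, 3.0, 5.0, 1.0)) for x in xs]

for x, p in curve:
    print(f"{x:6.1f}  {p:.4f}")
```

Evaluating the function at the midpoint x = 4 gives a quick numerical check of the claim in part (b).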

3. (a) Suppose we have two normal distributions with the same covariances but different means: N(µ1, Σ) and N(µ2, Σ). In terms of their prior probabilities P(ω1) and P(ω2), state the condition that the Bayes decision boundary does not pass between the two means.

(b) Consider an example on pp. 45-46 of Lecture 3 with general normal distributions for a 3-class problem. For the given distributions derive the equations of the boundaries between classes (the plots of the boundaries are given in the notes). Compute numerically the coordinates of the two points where the boundaries of all three classes meet.

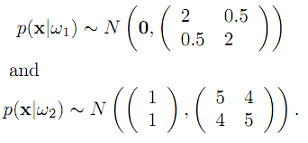

4. Consider a two-category classification problem in two dimensions with

(a) Calculate the Bayes decision boundary.

(b) Calculate the Bhattacharyya error bound.
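As a reminder for part (b), the Bhattacharyya bound for two Gaussian class-conditional densities has the standard form below. Here µi and Σi denote the mean and covariance of class ωi; the symbol k(1/2) for the exponent follows the cited textbook's usual notation, which is an assumption about the notation intended here.

```latex
P(\text{error}) \le \sqrt{P(\omega_1)\,P(\omega_2)}\; e^{-k(1/2)},
\qquad
k(1/2) = \frac{1}{8}(\mu_2 - \mu_1)^t
         \left[\frac{\Sigma_1 + \Sigma_2}{2}\right]^{-1}(\mu_2 - \mu_1)
       + \frac{1}{2}\ln\frac{\left|\frac{\Sigma_1 + \Sigma_2}{2}\right|}
                            {\sqrt{|\Sigma_1|\,|\Sigma_2|}}
```

When Σ1 = Σ2 the logarithmic term vanishes and only the quadratic term in the mean difference remains.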

5. Use the classifier given by Eq. 49:

$$g_i(x) = -\tfrac{1}{2}(x - \mu_i)^t\,\Sigma_i^{-1}(x - \mu_i) - \frac{d}{2}\ln 2\pi - \tfrac{1}{2}\ln|\Sigma_i| + \ln P(\omega_i)$$

to classify the following 10 samples from the table

| Sample | ω1: x1 | ω1: x2 | ω1: x3 | ω2: x1 | ω2: x2 | ω2: x3 | ω3: x1 | ω3: x2 | ω3: x3 |
|---|---|---|---|---|---|---|---|---|---|
| 1 | -5.01 | -8.12 | -3.68 | -0.91 | -0.18 | -0.05 | 5.35 | 2.26 | 8.13 |
| 2 | -5.43 | -3.48 | -3.54 | 1.30 | -2.06 | -3.53 | 5.12 | 3.22 | -2.66 |
| 3 | 1.08 | -5.52 | 1.66 | -7.75 | -4.54 | -0.95 | -1.34 | -5.31 | -9.87 |
| 4 | 0.86 | -3.78 | -4.11 | -5.47 | 0.50 | 3.92 | 4.48 | 3.42 | 5.19 |
| 5 | -2.67 | 0.63 | 7.39 | 6.14 | 5.72 | -4.85 | 7.11 | 2.39 | 9.21 |
| 6 | 4.94 | 3.29 | 2.08 | 3.60 | 1.26 | 4.36 | 7.17 | 4.33 | -0.98 |
| 7 | -2.51 | 2.09 | -2.59 | 5.37 | -4.63 | -3.65 | 5.75 | 3.97 | 6.65 |
| 8 | -2.25 | -2.13 | -6.94 | 7.18 | 1.46 | -6.66 | 0.77 | 0.27 | 2.41 |
| 9 | 5.56 | 2.86 | 2.26 | -7.39 | 1.17 | 6.30 | 0.90 | -0.43 | -8.71 |
| 10 | 1.03 | -3.33 | 4.33 | -7.50 | -6.32 | -0.31 | 3.52 | -0.36 | 6.43 |

in the following way. Assume that the underlying distributions are normal.

(a) Assume that the prior probabilities for the first two categories are equal, P(ω1) = P(ω2) = ½, with P(ω3) = 0, and design a dichotomizer for those two categories using only the x1 feature value.

(b) Determine the empirical training error on your samples, i.e., the percentage of points misclassified.

(c) Use the Bhattacharyya bound to bound the error you will get on novel patterns drawn from the distributions.

(d) Repeat all of the above, but now use two feature values, x1, and x2.

(e) Repeat, but use all three feature values.

(f) Discuss your results. In particular, is it ever possible, for a finite set of data, that the empirical error is larger when more feature dimensions are used?
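The workflow of parts (a) to (c) can be sketched for the one-feature case as follows. This is an illustrative outline under stated assumptions, not a reference solution: the Gaussian parameters are estimated by maximum likelihood (variance divided by n rather than n - 1), which is an assumption, and all function names are hypothetical. The x1 values are copied from the ω1 and ω2 columns of the table. Since P(ω3) = 0, that class is left out, which also avoids taking ln 0.

```python
import math

# x1 feature values for omega_1 and omega_2, copied from the table.
W1_X1 = [-5.01, -5.43, 1.08, 0.86, -2.67, 4.94, -2.51, -2.25, 5.56, 1.03]
W2_X1 = [-0.91, 1.30, -7.75, -5.47, 6.14, 3.60, 5.37, 7.18, -7.39, -7.50]


def fit_gaussian(samples):
    """Mean and variance by maximum likelihood (divides by n; an assumption)."""
    n = len(samples)
    mu = sum(samples) / n
    var = sum((s - mu) ** 2 for s in samples) / n
    return mu, var


def discriminant(x, mu, var, prior):
    """Eq. 49 specialised to d = 1, where Sigma_i reduces to a variance."""
    return (-0.5 * (x - mu) ** 2 / var
            - 0.5 * math.log(2.0 * math.pi)
            - 0.5 * math.log(var)
            + math.log(prior))


def classify(x, params1, params2, prior1=0.5, prior2=0.5):
    """Return 1 or 2, whichever class has the larger discriminant."""
    g1 = discriminant(x, *params1, prior1)
    g2 = discriminant(x, *params2, prior2)
    return 1 if g1 >= g2 else 2


def empirical_error(samples1, samples2, params1, params2):
    """Fraction of training points assigned to the wrong class."""
    wrong = sum(classify(x, params1, params2) != 1 for x in samples1)
    wrong += sum(classify(x, params1, params2) != 2 for x in samples2)
    return wrong / (len(samples1) + len(samples2))


def bhattacharyya_bound(params1, params2, prior1=0.5, prior2=0.5):
    """Bhattacharyya upper bound on the error for two 1-D Gaussians."""
    (mu1, var1), (mu2, var2) = params1, params2
    avg_var = 0.5 * (var1 + var2)
    k = ((mu2 - mu1) ** 2 / (8.0 * avg_var)
         + 0.5 * math.log(avg_var / math.sqrt(var1 * var2)))
    return math.sqrt(prior1 * prior2) * math.exp(-k)


params1 = fit_gaussian(W1_X1)
params2 = fit_gaussian(W2_X1)
train_error = empirical_error(W1_X1, W2_X1, params1, params2)
bound = bhattacharyya_bound(params1, params2)
print(f"training error: {train_error:.2f}, Bhattacharyya bound: {bound:.4f}")
```

Parts (d) and (e) follow the same pattern, with the scalar mean and variance replaced by a mean vector and covariance matrix, so the quadratic term and the log-determinant need matrix operations.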

Textbook - Duda, Hart, and Stork, Pattern Classification, Wiley, 2nd edition, 2001.
