Example Calculation:

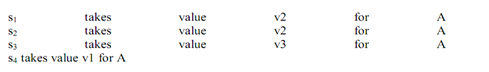

If we see an example we are working with a set of examples like S = {s1,s2,s3,s4} categorised with a binary categorisation of positives and negatives like that s1 is positive and the rest are negative. Expect further there that we want to calculate the information gain of an attribute, A, and A can take the values {v1,v2,v3} obviously. So lat in finally assume that as:

Whether to work out the information gain for A relative to S but we first use to calculate the entropy of S. Means that to use our formula for binary categorisations that we use to know the proportion of positives in S and the proportion of negatives. Thus these are given such as: p+ = 1/4 and p- = 3/4. So then we can calculate as:

Entropy(S) = -(1/4)log2(1/4) -(3/4)log2(3/4) = -(1/4)(-2) -(3/4)(-0.415) = 0.5 + 0.311

= 0.811

Now next here instantly note that there to do this calculation into your calculator that you may need to remember that as: log2(x) = ln(x)/ln(2), when ln(2) is the natural log of 2. Next, we need to calculate the weighted Entropy(Sv) for each value v = v1, v2, v3, v4, noting that the weighting involves multiplying by (|Svi|/|S|). Remember also that Sv is the set of examples from S which have value v for attribute A. This means that: Sv1 = {s4}, sv2={s1, s2}, sv3 = {s3}.

We now have need to carry out these calculations:

(|Sv1|/|S|) * Entropy(Sv1) = (1/4) * (-(0/1)log2(0/1) - (1/1)log2(1/1)) = (1/4)(-0 -

(1)log2(1)) = (1/4)(-0 -0) = 0

(|Sv2|/|S|) * Entropy(Sv2) = (2/4) * (-(1/2)log2(1/2) - (1/2)log2(1/2))

= (1/2) * (-(1/2)*(-1) - (1/2)*(-1)) = (1/2) * (1) = 1/2

(|Sv3|/|S|) * Entropy(Sv3) = (1/4) * (-(0/1)log2(0/1) - (1/1)log2(1/1)) = (1/4)(-0 -

(1)log2(1)) = (1/4)(-0 -0) = 0

Note that we have taken 0 log2(0) to be zero, which is standard. In our calculation,

we only required log2(1) = 0 and log2(1/2) = -1. We now have to add these three values together and take the result from our calculation for Entropy(S) to give us the final result:

Gain(S,A) = 0.811 - (0 + 1/2 + 0) = 0.311

Now we look at how information gain can be utilising in practice in an algorithm to construct decision trees.