Architecture Of Neural Network

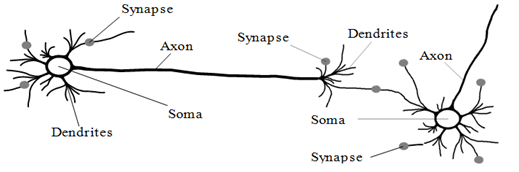

A neural network is a method for building intelligent programs using models that simulate the working network of neurons in the human brain. A neuron is made up of several protrusions called dendrites and a long branch called the axon, which receive information from and pass information to other neurons. Neurons are connected through synapses to form a basic bio-computational system. Conceptually, the connections represent the relations among the neurons. The diagram illustrates the basic architecture of neurons and connections. The number of connections in a network is so large that it gives the network sophisticated capabilities such as logical deduction and object perception in natural scenes.

Diagram of: Basic Architecture of Neurons and Connections

The axon and dendrites are the channels for transmitting and receiving information. The synapses process the stimuli and their limitations. The neurons compute and produce the reaction signal. The whole procedure of sharing and processing information is therefore carried out in three steps:

- Receiving the information.

- Processing the information.

- Responding to the information.

These steps repeat until the network reaches an exact response to the stimuli.

Neural networks have been widely used in pattern recognition and classification tasks such as vision and speech processing. Their benefits over traditional classification schemes and expert systems are:

- Parallel consideration of multiple constraints

- Capability for continued learning throughout the life of the system

- Graceful degradation of performance

- Capability to learn arbitrary mappings between input and output spaces

Indeed, neural network computing shows great potential for complex data processing and data interpretation tasks. Neural networks are modeled after the neuro-physical structure of human brain cells and the connections between those cells. Such networks are characterized by their distinctive structures and learning capabilities.

Neural networks differ from most other classes of AI tools in that the network does not need explicit rules and knowledge to perform tasks. The strength of a neural network is its ability to make reasonable generalizations and perform reasonably on patterns that have never before been presented to it. Networks learn a problem-solving procedure by "characterizing" and "memorizing" the special features associated with each training case or example, and by "generalizing" that knowledge. Internally, this learning is done by adjusting the weights attached to the interconnections among the nodes of the network. Training can be done in batches or one case at a time in an incremental mode.
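The difference between incremental and batch training can be sketched with a single linear unit. The text does not name a learning rule, so the delta rule used here, the learning rate, and the sample data are all assumptions chosen for illustration.

```python
def train_incremental(samples, lr=0.1, epochs=50):
    """Adjust the weight after every single training case."""
    w = 0.0
    for _ in range(epochs):
        for x, target in samples:
            error = target - w * x      # mismatch for this one case
            w += lr * error * x         # immediate weight adjustment
    return w


def train_batch(samples, lr=0.1, epochs=50):
    """Average the correction over all cases, then adjust once per epoch."""
    w = 0.0
    for _ in range(epochs):
        total = sum((target - w * x) * x for x, target in samples)
        w += lr * total / len(samples)  # one adjustment per pass over the data
    return w


# Hypothetical training cases following target = 2 * x.
samples = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]
w_inc = train_incremental(samples)
w_bat = train_batch(samples)
print(w_inc, w_bat)
```

Both modes adjust the same weight; they differ only in how often the adjustment is applied.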

Neural networks are inspired by biological systems in which large numbers of neurons, which individually function rather slowly, collectively perform tasks at speeds that even the most advanced computers cannot match. These networks are made of a number of simple processors, connected to one another by adjustable memory elements. Each connection is associated with a weight, and the weight is adjusted by experience.

Among the more interesting properties of neural networks is their ability to learn. Neural networks are not the only class of structures that learn. It is their learning ability, coupled with the distributed processing inherent in neural network systems, that distinguishes these systems from others.

Because neural networks learn, they are distinguished from current "AI" expert systems in that they are much more flexible and adaptive: they can be thought of as dynamic repositories of knowledge.

Researchers have long believed that neurons are responsible for the human capacity to learn, and it is in this sense that a neural network emulates the physical structure to accomplish machine learning. Each computational unit computes some function of its inputs and passes the result to connected units in the network. The system's knowledge emerges from the entire network of neurons.

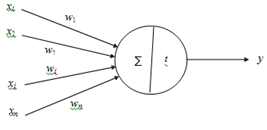

The following diagram shows the analog of a neuron as a threshold element. The variables $x_1, x_2, \ldots, x_i, \ldots, x_n$ are the n inputs to the threshold element. They are analogous to impulses arriving at one neuron from various other neurons. The variables $w_1, w_2, \ldots, w_i, \ldots, w_n$ are the weights associated with the inputs, reflecting the relative significance of the path along which each input arrives.

When $w_i$ is positive, input $x_i$ acts as an excitatory signal for the element. When $w_i$ is negative, input $x_i$ acts as an inhibitory signal. The threshold element sums the products of the inputs and their weights ($\sum w_i x_i$), compares this sum to a prescribed threshold value and, if the sum is greater than the threshold, computes an output using a nonlinear function F. The output signal y is a nonlinear function F of the difference between the computed sum and the threshold value, and is expressed as:

$$y = F\left(\sum_{i=1}^{n} w_i x_i - t\right) \tag{2}$$

where $x_i$ = signal input (i = 1, 2, ..., n),

$w_i$ = weight associated with the signal input $x_i$, and

t = threshold level prescribed by the user.
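The threshold element of Eqn. (2) can be sketched in a few lines. Two things here are assumptions rather than part of the text: `tanh` stands in for the unspecified nonlinear function F, and the element is taken to output 0 when the weighted sum does not exceed the threshold.

```python
import math


def threshold_element(inputs, weights, t, F=math.tanh):
    """Return y = F(sum(w_i * x_i) - t) when the weighted sum exceeds t."""
    s = sum(w * x for w, x in zip(weights, inputs))
    if s <= t:
        return 0.0          # assumption: no output below the threshold
    return F(s - t)         # F = tanh is a stand-in for the nonlinear function


weights = [0.5, -0.4, 0.3]  # positive = excitatory, negative = inhibitory
y_fired = threshold_element([1, 0, 1], weights, t=0.5)   # sum is 0.8
y_quiet = threshold_element([1, 1, 1], weights, t=0.5)   # sum is about 0.4
print(y_fired, y_quiet)
```

In the second call the inhibitory input on the middle path pulls the sum below the threshold, so the element stays silent.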

Diagram of: Threshold Element as an Analog of a Neuron

Learning rules define which network parameters (weights, number of connections, thresholds, etc.) change over time and in what way.

A node receives input from other nodes through weighted connections or links. The node is activated by the total effect of the weighted signals. Its output is calculated by passing the sum of those weighted signals through a function, usually a sigmoid function. Output signals then travel along other weighted connections to connected nodes.
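A single node of this kind can be sketched as follows. The logistic form of the sigmoid is a common choice and is assumed here; the function names and the example values are illustrative only.

```python
import math


def sigmoid(z):
    """Logistic sigmoid: maps any real number into the interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def node_output(signals, weights):
    """Sum the weighted incoming signals and pass the sum through the sigmoid."""
    total = sum(w * s for w, s in zip(weights, signals))
    return sigmoid(total)


balanced = node_output([1.0, 1.0], [2.0, -2.0])  # weighted signals cancel out
excited = node_output([1.0, 1.0], [2.0, 1.0])    # net positive input
print(balanced, excited)
```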

The simplest form of such a neural network is the two-layer network. As the name suggests, it has only two layers of nodes: input and output. The input patterns arriving at the input layer are mapped directly to a set of output patterns at the output layer. There are no hidden nodes; that is, there is no internal representation. These simply structured networks have proved useful in practice in a variety of applications. The essential characteristic of such a network is that it maps similar input patterns to simple output patterns.

Researchers have found that neural networks respond to external stimuli through electrical reactions. Over the years, researchers have tried to simulate a neural system using physical devices such as electrical circuits, wires, and resistors. The Hopfield Neural Network (HNN) is a prototype simulated neural system that possesses an extremely efficient coupling capability.

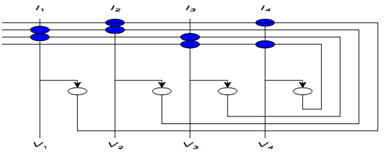

An HNN has units such as neurons, dendrites, synapses, and an axon, all built from electrical devices. Their functions and implicit meanings are exactly the same as in the bio-neural system. The architecture of the HNN is shown in the following diagram. The basic units are implemented as follows:

- Parallel input subsystem as dendrites Ii, i = 1, 2, 3, 4

- Parallel output subsystem as axon Vi, i = 1, 2, 3, 4

- Interconnectivity subsystem as synapses, the circuit in the schematic

The neurons are built from electrical amplifiers in conjunction with the subsystems listed above, so as to simulate the basic computational features of the human neural system. The HNN processes external stimuli in the same way as its biological counterpart. The functions of receiving, processing, and responding to the stimuli are translated into three main electrical functions performed by the HNN:

- Sigmoid function

- Computing function

- Updating function

Diagram of: Schematic of a Simplified Four Neuron HNN

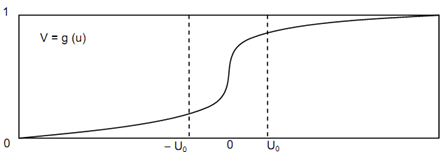

The sigmoid function is a non-linear, increasing mathematical curve, as shown in the following diagram. It describes how a neuron reacts to the external signal and generates its initial excitability value. The computing function, which holds all the essential information about the procedure for producing a reaction signal, is the main processor of the external information. The updating function sends out the reaction signals to the stimuli and passes these signals back to the network to adjust the neurons' reactions. In this way the HNN is able, by itself, to provide an exact response to the external stimuli and settle into a stable state.
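How these three functions interact can be sketched with a small simulation of a four-neuron network. This is a simplified continuous Hopfield-style model under stated assumptions, not the circuit in the schematic: the weight matrix `W`, the external inputs `I`, the step size `dt`, and the update equation itself are all hypothetical choices made for illustration.

```python
import math


def sigmoid(u):
    """Sigmoid function: turns a neuron's internal state into its output."""
    return 1.0 / (1.0 + math.exp(-u))


def run_hnn(W, I, dt=0.1, tol=1e-9, max_steps=5000):
    """Iterate until the outputs stop changing, i.e. the network is stable."""
    n = len(I)
    u = [0.0] * n                       # internal states of the neurons
    V = [sigmoid(x) for x in u]         # initial excitability values
    for step in range(1, max_steps + 1):
        # Computing function: reaction to the stimuli I and the other outputs.
        du = [-u[i] + sum(W[i][j] * V[j] for j in range(n)) + I[i]
              for i in range(n)]
        # Updating function: feed the reaction back into the network.
        u = [u[i] + dt * du[i] for i in range(n)]
        V_new = [sigmoid(x) for x in u]
        change = max(abs(a - b) for a, b in zip(V_new, V))
        V = V_new
        if change < tol:
            return V, step
    return V, max_steps


# Hypothetical symmetric synapse weights (zero diagonal) and input stimuli.
W = [[0.0, 0.5, -0.5, 0.5],
     [0.5, 0.0, 0.5, -0.5],
     [-0.5, 0.5, 0.0, 0.5],
     [0.5, -0.5, 0.5, 0.0]]
I = [0.5, -0.3, 0.2, 0.1]
V, steps = run_hnn(W, I)
print(steps, V)
```

The loop stops once the outputs no longer change, which corresponds to the network settling into a stable state.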

A three-layer perceptron architecture is used here for illustration. The layers are organized into a feed-forward system, with each layer fully connected to the next layer but with no connections inside a layer and no feedback connections to the prior layer. The first layer is the input layer, whose units take on activations equal to the corresponding network input values. The second layer is referred to as a hidden layer, since its outputs are used internally and are not considered outputs of the network. The last layer is the output layer. The activations of the output units are considered the response of the network.

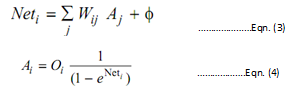

The processing functions are as given:

where φ is a unit bias, similar to a threshold.

Short-term operation of the network is straightforward. The input layer unit activations are set equal to the corresponding elements of an input vector. These activations propagate to the hidden layer through weighted connections and are processed according to the functions above. The hidden layer outputs then propagate to the output layer, where they are again processed by the same functions. The activations of the output layer units form the network's response pattern.
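The propagation just described can be sketched as a forward pass. The processing functions are assumed here to be a weighted sum minus the unit bias φ, followed by a logistic sigmoid; that form, the sign of the bias, and all weight values are assumptions for illustration.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def layer(activations, weights, biases):
    """One layer: weighted sum of the incoming activations minus the unit
    bias phi (assumed sign convention), passed through the sigmoid."""
    return [sigmoid(sum(w * a for w, a in zip(row, activations)) - phi)
            for row, phi in zip(weights, biases)]


def forward(x, W_hidden, phi_hidden, W_out, phi_out):
    input_act = list(x)                               # input layer copies x
    hidden_act = layer(input_act, W_hidden, phi_hidden)
    return layer(hidden_act, W_out, phi_out)          # the network's response


# Hypothetical network: 2 inputs, 3 hidden units, 2 output units.
W_hidden = [[0.8, -0.4], [0.3, 0.9], [-0.6, 0.5]]
phi_hidden = [0.1, -0.2, 0.0]
W_out = [[1.0, -1.0, 0.5], [-0.7, 0.6, 0.2]]
phi_out = [0.0, 0.1]
response = forward([0.3, 0.7], W_hidden, phi_hidden, W_out, phi_out)
print(response)
```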

We provide a brief overview and analysis of the perceptron architecture to show how classification occurs inside the network. The analysis is given in terms of a threshold unit activation function rather than the sigmoid function of Eq. 4. The basic operation of the network is the same for both functions.

An output or hidden unit using a threshold function is either entirely deactivated or completely activated, depending on the state of its inputs. Each unit is capable of deciding to which of two classes its current inputs belong, and may be viewed as forming a decision hyperplane through the n-dimensional input space. The orientation of this hyperplane depends on the values of the connection weights to the unit.

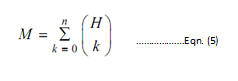

Each unit thus divides the input space into two regions. However, many more regions, of much more complex shape, can be represented by considering the decisions of all hidden units simultaneously. The maximum number of regions M representable by H hidden nodes having n inputs each is given by

The actual number of representable regions will likely be lower, depending on the efficiency of the learning algorithm. Note that if two units share the same hyperplane, or if three or more hyperplanes share a common intersection point, the number of representable classes decreases.
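The formula is not reproduced in the text at this point. Assuming it is the standard count of regions formed by H hyperplanes in general position in an n-dimensional space, it reads:

$$M(H, n) = \sum_{k=0}^{n} \binom{H}{k}, \qquad \binom{H}{k} = 0 \text{ for } k > H$$

A short sketch under that assumption (the function name `max_regions` is illustrative):

```python
from math import comb


def max_regions(H, n):
    """Maximum number of regions that H hyperplanes in general position
    can carve out of an n-dimensional input space."""
    # math.comb(H, k) already returns 0 when k > H.
    return sum(comb(H, k) for k in range(n + 1))


one_unit = max_regions(1, 2)     # a single line splits the plane in two
three_units = max_regions(3, 2)  # three lines in general position
print(one_unit, three_units)
```

The "general position" condition is what the degenerate cases above violate: coincident hyperplanes or shared intersection points give fewer regions than this bound.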

Each unit in a hidden layer may be seen as classifying the input according to micro-features. Additional hidden layers and the output layer then further classify the input according to higher-level features composed of sets of micro-features. For instance, a two-input perceptron with no hidden layers cannot learn the exclusive "OR" classification of its inputs, because this requires classifying two disjoint regions of the input space into the same class. Adding a hidden layer results in the formation of a higher-level feature that integrates the two disjoint regions into one, allowing correct classification.
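The exclusive "OR" case can be made concrete with threshold units. The weights and thresholds below are hand-picked for illustration, not learned, and the unit names are hypothetical.

```python
def step(z):
    """Threshold activation: fully on or fully off."""
    return 1 if z > 0 else 0


def xor_net(x1, x2):
    # Hidden micro-features: each unit is one decision line in input space.
    h_or = step(x1 + x2 - 0.5)    # on when at least one input is on
    h_and = step(x1 + x2 - 1.5)   # on only when both inputs are on
    # Output unit: a higher-level feature, "OR but not AND".
    return step(h_or - h_and - 0.5)


table = {(a, b): xor_net(a, b) for a in (0, 1) for b in (0, 1)}
print(table)
```

The two hidden units cut the input space with two parallel lines, and the output unit merges the two disjoint "exactly one input on" regions into a single class.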

The performance of a network using a sigmoid unit activation function may be interpreted in a similar fashion. However, the sigmoid function introduces "fuzziness" into the decision making of a unit. The unit no longer divides the input space into two crisp regions. This fuzziness propagates through the network to the output.

However, the goal of a classification system is to make a decision about its inputs, so a threshold decision must be introduced at some point in the system. In our system, the output nodes employ an output function different from the activation function:

$$O_i = \begin{cases} 1 & \text{if } A_i > t \text{ and } A_i = \max_j \{A_j\} \\ 0 & \text{otherwise} \end{cases} \tag{6}$$

where t is a threshold value.
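The output function of Eqn. (6) can be sketched directly. The function name and the example activations are illustrative; note that, as the equation is written, tied maximum activations above the threshold would all produce 1.

```python
def output_function(activations, t):
    """O_i = 1 if A_i exceeds the threshold t and is the largest activation,
    otherwise 0."""
    top = max(activations)
    return [1 if (a > t and a == top) else 0 for a in activations]


confident = output_function([0.2, 0.9, 0.6], t=0.5)  # one clear winner
undecided = output_function([0.2, 0.3, 0.1], t=0.5)  # nothing above threshold
print(confident, undecided)
```

When no activation exceeds the threshold, every output is 0, so the network makes no decision rather than a weak one.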