Basic Concept of Data Parallelism

Consider the situation where the same problem of submitting an "electricity bill" is handled as follows:

Again, there are three counters. However, now each counter handles all the tasks of a resident for the submission of his/her bill. Again, we assume that the time required to submit one bill form is the same as before, i.e., 5 + 300 + 5 = 310 sec.

We assume that all the counters operate simultaneously and that each person at a counter takes 310 seconds to process one bill. Then, the time taken to process all the 10,000 bills will be 310*(9999/3) + 310*1 sec = 1,033,540 sec.

This time is considerably less than the time taken in the previous situations, viz. 3,000,000 sec and 3,100,000 sec respectively.
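The arithmetic above can be checked with a short sketch. The function and constant names are illustrative assumptions, and the model simply assumes that every counter processes one bill per 310-second round.

```python
import math

BILLS = 10_000               # total bills to process (from the example)
COUNTERS = 3                 # counters working simultaneously
SECONDS_PER_BILL = 5 + 300 + 5  # full handling of one bill at one counter


def data_parallel_time(bills, counters, seconds_per_bill):
    """Total time when each counter fully handles one bill per round."""
    # 9,999 bills fill 3,333 complete rounds; the last bill needs one more round.
    rounds = math.ceil(bills / counters)
    return rounds * seconds_per_bill


total = data_parallel_time(BILLS, COUNTERS, SECONDS_PER_BILL)
print(total)  # 1033540

# Ratio against the two earlier situations quoted in the text.
for previous in (3_000_000, 3_100_000):
    print(previous, round(previous / total, 2))
```

The ratios come out close to 3, which is what one would expect with three counters working independently.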

The situation discussed here illustrates the concept of data parallelism. In data parallelism, the complete set of data is divided into multiple blocks, and the operations on the blocks are applied in parallel. As is evident from this example, data parallelism is faster than the previous situations. Here, no synchronisation is required between processors (or counters). It is also more tolerant of faults: the working of one person does not affect the others. No communication is required between processors, so interprocessor communication is less. Data parallelism has certain disadvantages. These are as follows:

i) The task to be performed by each processor is predetermined, i.e., the assignment of load is static.

ii) It should be possible to break the input task into mutually exclusive tasks. In the given example, space would be required for the counters. This requires multiple hardware, which may be costly.
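The idea of dividing the data into mutually exclusive blocks with a static assignment can be sketched as follows. This is only a minimal illustration: `process_bill` is a hypothetical stand-in for the full handling of one bill, and a thread pool is used for simplicity (for CPU-bound work in Python, a process pool would normally be substituted).

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_blocks(items, num_blocks):
    """Statically split items into contiguous, mutually exclusive blocks."""
    size, extra = divmod(len(items), num_blocks)
    blocks, start = [], 0
    for i in range(num_blocks):
        # The first `extra` blocks receive one additional item.
        end = start + size + (1 if i < extra else 0)
        blocks.append(items[start:end])
        start = end
    return blocks


def process_bill(bill_id):
    """Hypothetical stand-in for all the steps needed for one bill."""
    return f"bill {bill_id} processed"


def process_block(block):
    """One counter handles every bill in its own block, start to finish."""
    return [process_bill(bill_id) for bill_id in block]


def process_all(bills, num_counters=3):
    """Apply the same operation to every block in parallel."""
    blocks = split_into_blocks(bills, num_counters)
    with ThreadPoolExecutor(max_workers=num_counters) as pool:
        # No block depends on another, so no synchronisation is needed.
        per_block = list(pool.map(process_block, blocks))
    return [result for block in per_block for result in block]


print(split_into_blocks(list(range(10)), 3))
print(process_all(list(range(5))))
```

Because the blocks are fixed before any work starts, a slow counter cannot hand its remaining bills to an idle one, which is the static-load drawback noted in point i).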

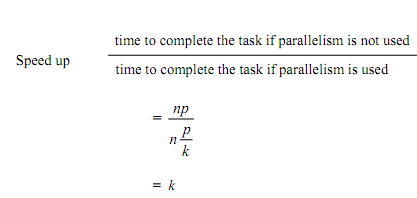

The estimation of the speedup achieved by using the above type of parallel processing is as follows:

Let the number of jobs = n

Let the time to do a job = p

If each job is divided into k tasks,

Assuming each job is ideally divisible into tasks, as mentioned above, the time taken to complete one task = p/k

Time taken to finish n jobs without parallel processing = n*p

Time to finish n jobs with parallel processing = n*p/k
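Taking the ratio of the two times above gives the speedup under the ideal-divisibility assumption. The sketch below evaluates it with exact fractions; the function names and the sample values are illustrative assumptions, not part of the original analysis.

```python
from fractions import Fraction


def sequential_time(n, p):
    """Time to finish n jobs without parallel processing: n*p."""
    return Fraction(n) * Fraction(p)


def parallel_time(n, p, k):
    """Time to finish n jobs with parallel processing: n*p/k."""
    return Fraction(n) * Fraction(p) / k


def speedup(n, p, k):
    """Ratio of sequential time to parallel time."""
    return sequential_time(n, p) / parallel_time(n, p, k)


# Illustrative values only: n jobs, p seconds per job, k tasks per job.
print(speedup(n=10_000, p=310, k=3))  # 3
```

Under these assumptions the ratio reduces to k regardless of n and p, since n*p / (n*p/k) = k.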